March 18, 2014 - by Simone Ulmer

In the Swiss High Performance and High Productivity Computing (HP2C) project, climate scientists at ETH Zurich and MeteoSchweiz have spent the last four years revising the application software for their climate and weather models and preparing it to run on graphics processors (GPUs). They saw an opportunity to calculate high-resolution climate and weather models on the latest supercomputer architecture whilst containing the energy costs. Models of this kind could lead to improved climate forecasts and supply useful information for strategies for adapting to a changing climate.

“Natural” cloud formation in the model

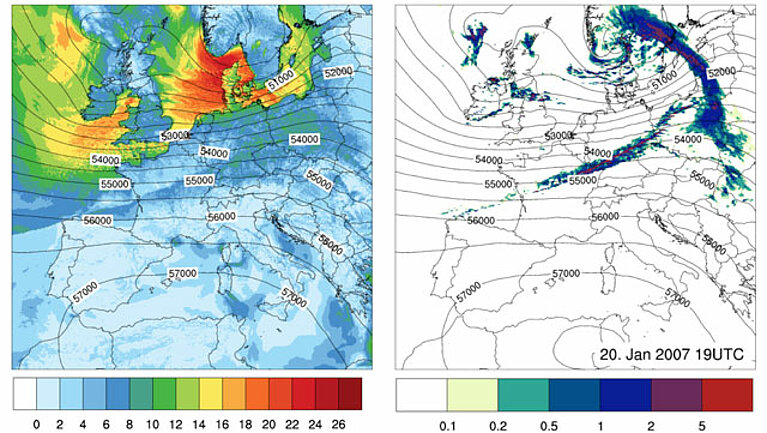

The finer the simulation grid, the more precise the results the researchers hope to obtain from it. For the research group of climate scientist and ETH Professor Christoph Schär, even higher resolution means “cloud-resolving”: the grid spacing must be small enough for convective clouds, such as thunderclouds, to be depicted in the model. According to the scientists, the high resolution makes it possible to formulate the model on basic physical principles and thereby avoid many uncertainties. Using the basic physical equations, the model could then generate the convective clouds by itself and depict the most important dynamic processes that lead to their formation, explains David Leutwyler, one of Schär’s doctoral students at the Institute for Atmospheric and Climate Science.

With a cloud-resolving grid spacing of only two kilometres, the researchers would now like to simulate the European climate of the last ten years. The calculation grid encompasses 1500 x 1500 x 60 grid points and covers all of continental Europe. “At the lateral edges, the model is driven by analytical data based on observations”, says Leutwyler. “It corresponds to a resolution of approximately 80 kilometres.” A simulation with a grid spacing of 12 kilometres is nested in between as an intermediate stage, and it in turn drives the cloud-resolving two-kilometre simulation. This stepwise refinement of the boundary conditions avoids the non-physical artefacts that can crop up when the jump in resolution is too large.
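To make the figures above more tangible, the following short Python sketch works through the arithmetic. It only uses the numbers quoted in the text (the 1500 x 1500 x 60 grid and the spacings of roughly 80, 12 and 2 kilometres); the function and variable names are illustrative assumptions and have nothing to do with the researchers’ actual model code.

```python
# Back-of-the-envelope arithmetic for the grid and nesting chain described above.
# All names here are hypothetical; only the numbers come from the article.

# Grid dimensions quoted in the article: 1500 x 1500 horizontal points, 60 levels.
NX, NY, NZ = 1500, 1500, 60

# Grid spacings in km: driving data (approximate) -> intermediate -> cloud-resolving.
NESTING_KM = [80, 12, 2]


def total_grid_points(nx, ny, nz):
    """Number of grid points in a regular nx x ny x nz grid."""
    return nx * ny * nz


def refinement_ratios(spacings_km):
    """Ratio of coarse to fine grid spacing for each step of the nesting chain."""
    return [coarse / fine for coarse, fine in zip(spacings_km, spacings_km[1:])]


points = total_grid_points(NX, NY, NZ)
ratios = refinement_ratios(NESTING_KM)
direct_ratio = NESTING_KM[0] / NESTING_KM[-1]

print(f"grid points: {points:,}")
print(f"refinement per nesting step: {[round(r, 2) for r in ratios]}")
print(f"refinement without the intermediate stage: {direct_ratio:.0f}")
```

With these numbers the grid holds 135 million points, and each nesting step refines the spacing by a factor of about six to seven, instead of a single jump by a factor of about 40 from the driving data to the two-kilometre grid.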

Support for adaptation measures

This method allows the scientists to examine how well their new models depict the processes that are relevant to their questions. “If we can be certain that we are able to simulate past time periods well, then this makes us more confident that we will be able to depict future climatic conditions in our model equally well”, says Leutwyler. They would like to simulate that future for Europe at a grid spacing of two kilometres as well.

The scientists’ initial objective is to tune the simulation of the past period against the measured conditions, refining the model until it can reliably simulate future climate. The model can also be used for process studies, for instance on how climate change affects heavy rainfall. Simulations of this kind can support strategies for adapting to a changing climate. “For example, if I know where heavier rainfall is expected, then I can adapt my wastewater infrastructure in order to avoid flooding”, says Leutwyler.

Successful test on GPUs

On the GPUs of “Piz Daint”, the researchers have reproduced the calculations which they previously carried out on conventional CPUs. “The differences you see in the cloud-resolving simulation over the Alps are in the range of model internal variability”, says Leutwyler. The researchers see this as confirmation that the restructuring and porting of their code has succeeded without any serious errors.

Over the last few weeks, Leutwyler has also been running test simulations to establish how the project’s calculation grid should best be placed over Europe so that all structures, including vortices in air currents, can be depicted.

For the scientists, it is clear that the new “Piz Daint” is what has made their cloud-resolving project over continental Europe possible, as it can solve problems that are ten times more computationally intensive. “As the GPUs have a larger memory bandwidth, we reach our goal three times more quickly and are seven times more efficient than we were before”, stresses Leutwyler.